Legendary Computer Scientist Donald Knuth.The 'K' of the KMP"People think that computer science is the art of geniuses but the actual reality is the opposite, just many people doing things that build on each other, like a wall of mini stones."-Knuth

I never really gave a thought about string matching unless of course i opened the 1313 pages Coreman ebook and pressed Ctrl+f to search for “String Matching” :P.when you discover things like these you automatically start looking for it.So the next time i used the search option to look for a mail or used the find and replace button or when i wondered what page rank would do if there was never really an algorithm for searching strings,the KMP was always on my mind :-)

String matching the word says it all.It is simply finding occurrence of a pattern in a given text.why do we need this?Other than the things already said String Matching also finds its application in identification of patterns in a DNA sequence.

They say a problem well formulated is half solved.Keeping the solving part aside for while lets concentrate on the formulating part.We assume that the text is an array $T (1 . . n)$ of length $n$ and that the pattern is an array $P(1 . . m)$ of length $m$.

And we say pattern P occurs with shift $s$ in text T if

$P[1 .. q] match T[s+1 . . s+q]$

If P occurs with shift $s$ in T , then we call $s$ a valid shift,otherwise we call $s$ an invalid shift. The string-matching problem is the problem of finding all valid shifts with which a given pattern P occurs in a given text T

For most of the problems there is always a naive method of solving it.One can call it The Brute Force.But why call it naive?Its just the easiest way out & necessarily(most of the time :P) not the best.So using this method if we have to find the occurrence of the pattern,we check for all possible shifts.After each shift, we one by one check characters at the current shift until a mismatch occurs and if all characters match then prints the match.

Google goggles

Say the pattern here is “goggles”

We can easily instruct the computer to do the following

Shift 1-

Google goggles

Goggles

Shift 2-Google goggles

goggles

Shift 3- Google goggles

goggles

Shift 4-Google goggles

goggles

Shift 5- Google goggles

goggles

Shift 6-Google goggles

goggles

Shift 7- Google goggles

goggles

Shift 8-Google goggles

goggles

Thus we say the pattern occurs at shift 8.

So what are we actually doing?Simply trying to match the pattern for all possible shifts.The algorithm is simple enough

NAIVE-STRING-MATCHER (T,P)

1. $n = T.length$ // Store the length

2. $m = P.length$

3. $for 0 to n-m$ // no of shifts

4. if $P (1 .. m) == T (s+1 .. s+m)$

5 .Print “pattern occurs with shift”,s

Just take a moment and think here.What can be the worst case?If you cant think of the worst case then take this example.Modifying the first example a tiny bit

T=Google google

P=Googles

In this case only the first shift changes.During the first shift you happily go on comparing when char after char matches only to be disappointed in the end when the last char turns out to be a mismatch(s and space are matched)*Damn!*.We can thus conclude The Naive pattern searching algorithm doesn't work well in cases where we see many matching characters followed by a mismatching character.

Hence in worst case we go through all the shifts which is $n-m+1$ & in each shift we end up comparing all the characters which is $m$.Hence the time complexity of this algorithm is $O((n-m+1)(m))$

In 1987 when two computer scientists fresh from their Turing Award looked at this problem they managed to give an altogether different approach.Their main idea was to represent the data in a different way.They used powerful computer science techniques like pre processing & hashing today.They converted both the pattern & and the sub-strings of the text into decimal numbers.The task of string matching would be then to simply check the equality between the numbers.It used to match the hash value of the pattern with the hash value of current sub-string of text, and if the hash values match then only it starts matching individual characters.Though the problem seemed to be simplified after the preprocessing,the main hinge was the amount of time taken in generating the hash values for the sub-strings of the text.Nevertheless its time complexity was better in average cases though it turned out to be same as the Naive method in the worst case scenario.

How can we say there is scope for improvement from the naive method?

From our example,we know that in shift 1 the first two characters “Go” match,can u not say confidently that shift 2 wont work.And this was just a small example.When dealing with thousands of words to match a pattern you can imagine the information wasted when a mismatch occurs using this method.The question that pops up now is whether it is possible to skip some shifts when we are confident enough from the previous shifts that it wont work.

You must have heard the proverb “The only real mistake is the one from which we learn nothing” or in this case it should be ”The only real mistake is the one from which we learn nothing from a mismatch:P”.

It is this ingenious idea that lead to what we call as KMP.

First published by Donald E. Knuth, James H. Morris and Vaughan R. Pratt, : “Fast Pattern Matching in Strings.” In SIA Journal on Computing.

So how do we go about formulating this idea?What they did was just this.Given that pattern characters P[1 .. q] match text characters T[s+1 .. s+q]

what is the least shift s' > s such that for some k < q,

P[1 .. k]=T[s'+1 .. s'+k]

You first take the pattern and calculate the value for 'k' for all cases like,if first char matches,first two char matches,first three matches and so on till all the char matches.Now we need to store this information for reference.Since we are not going to modify the data and access should be easy,we go for the array data structure.To make it appear more refined,lets call this array the prefix function.come on lets calculate the prefix function for the following.

P=Goggles

A[1]=Case where the first character matches.So we just shift by one pos

$s-k=1$

$1-k=1$

$k=0$

A[2]=The first 2 char “Go” matches.Now we are sure that shifting by one position wont work as G will be matched with o

$2-k=2$

$k=0$

A[3]=when “gog” matches.though shifting by one wont work,shifting by 2 may as it matches g with g

$3-k=2$

$k=1$

Similarly,

A[4]=1

A[5]=0

A[6]=0

Formulating the previous example(according to the algo below)

Shift 1- Google goggles

goggles

2 characters match-A[2]=k=0

shift by $q-k$ position,where $q$ is no of characters matched

2-0=2 positions

Shift 2-Google goggles

goggles

no characters match.simply move to next.

Shift 3-Google goggles

goggles

1 character has matched.and A[1]=0

1-0=1

shift by 1

Shift 4-Google goggles

goggles

no characters match.move next.

Shift 5-Google goggles

goggles.

Shift 6-Google goggles

goggles

And we are done :)

We can infer one thing.That the best case is when $k$ turns out to be 0.That is say $q$ characters have matched before the mismatch and $k$ turns out to be zero.Then we simply skip shifts from s,s+1......s+q.Look at shift 1 for instance in the above example.

Though given the trivial example above one may argue both methods being the same.But imagine the larger scenario where in we skip all wasteful shifts.

There are essentially two parts in the Algol.One to do the necessary preprocessing & the other to the matching task.

KMP-MATCHER (T,P)

1.$n$ =$T.length$

2.$m$=$P.length$

3.A=COMPUTE - PREFIX -FUNCTION(P)// Returns the array containing value of k

4 $q =0$

5 for i = 1 to n / / scan the text from left to right

6 while q > 0 and P[q+1] \neq T[i]

7 $q=A[q]$ / next character does not match

8 if P[q+1] == T[i]

9 q=q+1 / next character matches / is all of P matched?

10 if q == m

11 print “Pattern occurs with shift” i m

12 q=A[q] / look for the next match

COMPUTE -PREFIX-FUNCTION(P)

1 $m = P.length$

2 let A[1 .. m] be a new array

3 $A[1]=0$

4 $k = 0$

5 for q = 2 to m

6 while k > 0 and $P [k+1] \neq P[q]$

7 k=A[k]

8 if P[k+1]==P[q]

9 k=k+1

10 A[q]=k

11 return A

In the first one we taken the pattern and compute the value needed.This is done in O(m) time.

Give a thought about the second component.We learn from each mismatch & shift accordingly.what happens when the worst Case scenario in naive method occurs.That is,almost all but last character in the pattern matches.We shift by the ideal amount without wasting time over use less shifts.Thus matching can be done in at most O(n) time

Total time complexity=O(m+n) compared to the worst case in naive method i.e O(mn)

Reducing from the quadratic to a linear time!!! Isn't that awesome:-)

A great idea and the world follows.Thats just what happened here.If you think even you would have thought that way,then go,there are plenty of research problems out there waiting to be solved and if you succeed,who knows i may end up blogging about your algorithm too one day:)

Nikitha Shenoy

Include the link to the paper that you have quoted....

ReplyDeleteStarting your blog with a picture of Knuth with a quote below...wonderful..:):) its different..;):)

ReplyDeleteLater begining from 'what is string matching' ,you smoothly moved onto the naive algorithm for it, and then by explaining its drawback by a *damn* good [;)]example,you moved onto KMP algorithm..that was very well composed with a beautiful drift in the concepts...:):) great job there..:):)

Your modification of the proverb into ”The only real mistake is the one from which we learn nothing from a mismatch:P” is too gud..:)

A very nice example chosen and fantastically explained it by highlighting the matched strings in pattern and text..very clear:):)

It would have been good if you had explained the example for KMP too in the same way by comparing and highlighting..had a little confusion there..:):)

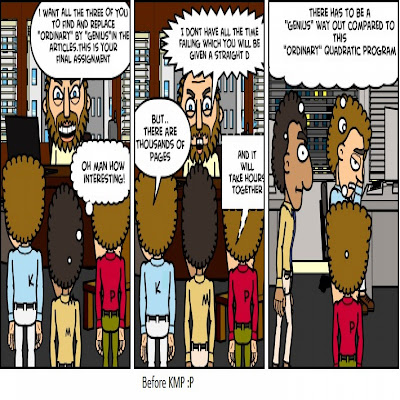

Other than that its an excellent explanation of kmp..:):)and comic saying 'before kmp' was a great idea..:):)Everything is neat and superb..:):)

Donald Knuth's image is very inspiring! "What would page rank without such an algorithm?" excellent thought.

ReplyDeleteThe example of "google goggles .." is too good.

The process of taking from brute force to the improvised algorithm ( quadratic to linear!!)was very neat.Hats off to the comic.

"there are plenty of research problems out there waiting to be solved and if you succeed,who knows i may end up blogging about your algorithm too one day:)" a very nice way to encourage readers.

A realistic start :) The 'K' of the KMP --- creative :) Reminds me of the string matching algorithm that we studied in Anany-Levitan :P

ReplyDeleteBrute force method for string matching explained very clearly.:)

Lol..awesome comic strip :D 'Reward mechanism' led them to find a new method altogether huh? ;)

Explain with an example for KMP too..:) does it recognise sub-strings? :)

A nice end..:)

All in all a creative blog :)

Phrase of the blog :A great idea and the world follows :)

the example for the naive algorithm is very well explained with the example. can you please do the same for KMP?

ReplyDelete@madhusudhan- Done:)

ReplyDeletethe appropriate bold chars. highlighting was extremely impressive... example narrating the KMP MATCHER and COMPUTE PREFIX would have made this blog really simple to understand and cool :D

ReplyDelete*a graphic example....

ReplyDeleteis your algorithm anyway similar to HORSPOOL'S ALGORITHM?

ReplyDelete